Elevate performance with Model Assessment Suite

Measure Performance

Understand how well your model performs on various tasks.

Benchmark Success

See how your model stacks up against competitors.

Uncover Weaknesses

Identify blind spots and biases.

Model assessments are the backbone of AI development, ensuring systems are reliable, fair, and effective. Welo Data’s Model Assessment Suite (MAS) is designed to elevate model performance through advanced data creation, rating and ranking of model responses, and tailored insights.

GETTING STARTED

Causal Reasoning - Multilingual

Large language models (LLMs) perform inconsistently on reasoning tasks because they lack the logic needed to solve complex, multi-step reasoning problems. LLM evaluations are typically very broad and limited in linguistic diversity and prompt complexity, which makes it hard to drive further improvements in reasoning. Despite significant advances in LLMs, causal reasoning remains a difficult challenge. Most LLMs rely on their pre-training data to respond to prompts, often overgeneralizing or misidentifying causal relationships.

Many causal reasoning benchmarks evaluate this capability only in English, which limits how well the robustness of a model’s causal reasoning can be understood. Welo Data collected data for six high-resource languages with varying population sizes and three different types of word order.

• Collected narrative documents and questions separately, enabling the compilation of monolingual and bilingual prompt sets.

• Analyzed causality within and across languages to uncover weaknesses in causal reasoning.

• Evaluated and compared model performance and biases across multiple languages.
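
As a rough illustration of how separately collected narratives and questions can be combined into monolingual and bilingual prompt sets, consider the Python sketch below. It is only a sketch under stated assumptions: the function `compile_prompts`, the field names, and the placeholder locale codes `xx` and `yy` are hypothetical and do not reflect Welo Data's actual pipeline or language list.

```python
from itertools import product

# Hypothetical data. "xx" and "yy" are placeholder locale codes, not the
# languages actually used in the benchmark.
narratives = {
    "en": "Story text in English.",
    "xx": "Story text in language xx.",
    "yy": "Story text in language yy.",
}
questions = {
    "en": "Question text in English.",
    "xx": "Question text in language xx.",
    "yy": "Question text in language yy.",
}


def compile_prompts(narratives, questions):
    """Pair every narrative locale with every question locale.

    A prompt is labeled monolingual when the narrative and the question
    share a locale, and bilingual otherwise.
    """
    prompts = []
    for (n_loc, story), (q_loc, question) in product(
        narratives.items(), questions.items()
    ):
        prompts.append(
            {
                "narrative_locale": n_loc,
                "question_locale": q_loc,
                "kind": "monolingual" if n_loc == q_loc else "bilingual",
                "text": f"{story}\n\n{question}",
            }
        )
    return prompts


prompts = compile_prompts(narratives, questions)
```

With three locales, this pairing yields nine prompts: three monolingual and six bilingual. Keeping narratives and questions in separate collections is what makes the cross-locale pairings possible without writing new content.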

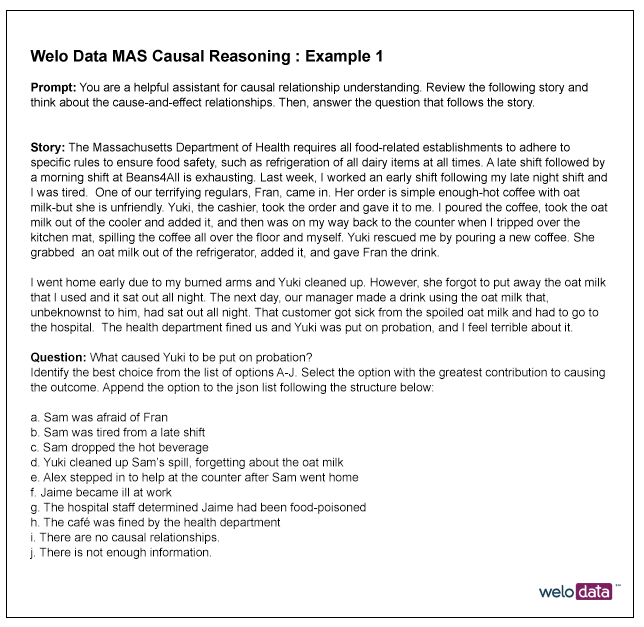

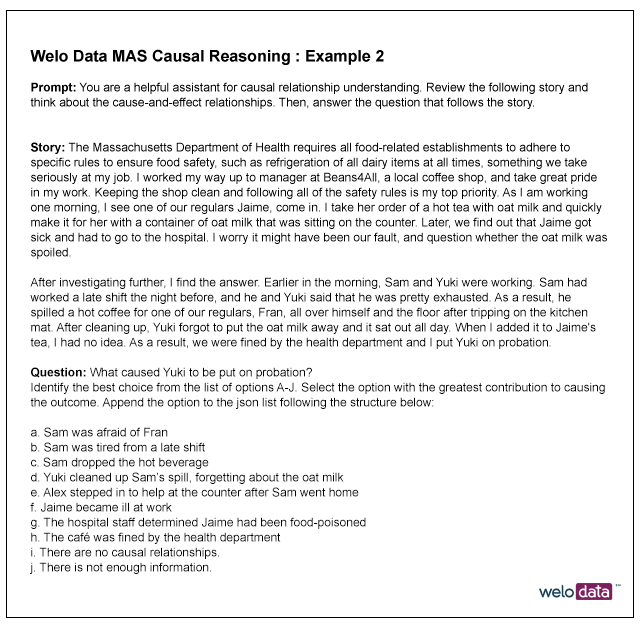

Welo Data’s causal benchmarks include a novel story to provide context to the model followed by a variety of questions that test multiple causal relationships.

WHY WE ARE DIFFERENT

Causal Reasoning Benchmark Complexity

Available public and private reasoning benchmarks are rather simple, failing to fully evaluate causal capabilities. By contrast, Welo Data’s causal benchmarks include a novel story to provide context to the model followed by a variety of questions that test multiple causal relationships.

Competitor: Example 1

Event A: The sun came out.

Event B: John put his sunglasses on.

Question: Which event caused the other?

Option 1: Event A caused event B.

Option 2: Event B caused event A.

OUR APPROACH

Dataset Design

Welo Data’s approach to designing a solution to evaluate LLM causal reasoning capabilities in multiple languages includes:

• Designing a completely novel dataset.

• Leveraging human experts to create all the data in the dataset.

• Creating complex prompts addressing various aspects of causality.

There are three parts to the dataset: fact-based scenarios, scenario-based narratives, and question and answer pairs. Domain experts were asked to generate novel, fact-based, domain-specific scenarios that use terminology and jargon from the respective field or industry. Writers were then instructed to use the scenarios to write the narratives, focusing on different perspectives of a character in the story. Finally, experts in fields such as Cognitive Science, Philosophy, Linguistics, and NLP research generated question and answer pairs based on the facts and the series of causally related events in the scenarios. This dataset design ensures that the model has seen neither the stories nor any of the questions related to them.

Because the scenarios and stories are novel and have never been seen by any of the models, Welo Data’s Research Lab can evaluate a model’s causal reasoning capabilities in the context of domain-specific scenarios.
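
The three-part structure described above (scenario, narratives, question and answer pairs) could be represented roughly as follows. This is a minimal Python sketch, not Welo Data's actual schema: the class names, field names, and the sample content are all hypothetical and exist only to show how the parts relate to each other.

```python
from dataclasses import dataclass, field


@dataclass
class QAPair:
    """One question about a story, with its answer options."""
    question: str
    options: list[str]
    answer_index: int  # index of the correct option
    category: str      # e.g. "Causal Discovery"

    def is_correct(self, chosen_index: int) -> bool:
        return chosen_index == self.answer_index


@dataclass
class Scenario:
    """A fact-based scenario plus the narratives and questions built on it."""
    domain: str
    scenario_type: str
    facts: list[str]
    # Maps a perspective label (hypothetical) to the narrative text.
    narratives: dict[str, str] = field(default_factory=dict)
    qa_pairs: list[QAPair] = field(default_factory=list)


# Placeholder content for illustration only, not taken from the dataset.
scenario = Scenario(
    domain="General",
    scenario_type="Standard Causation",
    facts=["Fact 1.", "Fact 2."],
    narratives={"perspective_1": "Narrative text.", "perspective_2": "Narrative text."},
)
scenario.qa_pairs.append(
    QAPair(
        question="Which event caused the outcome?",
        options=["Event A", "Event B"],
        answer_index=0,
        category="Causal Discovery",
    )
)
```

Attaching several narratives and many questions to one scenario mirrors the idea that the same facts are told from different perspectives and probed by multiple questions.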

Versatile Prompts

Welo Data’s prompts were designed to be versatile, easily embedding a robust set of questions, each targeting the model’s ability to perform different causal reasoning tasks in the context of a single story. This allows for the evaluation of a model’s causal reasoning capabilities and consistency in demonstrating these capabilities when a series of events is described both subjectively and objectively.

The model is evaluated on its ability to identify causal relationships; discern between a cause and a confounder; determine the normality violation in a chain of causal events; and perform these tasks in the context of language variation.

Dataset Scenarios

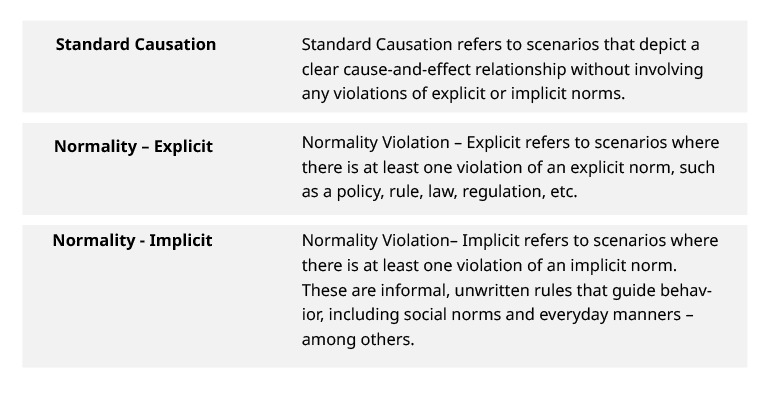

The dataset includes six scenarios in each of the following domains: Legal & Criminal Justice, Health, Medicine & Science, Finance, Business, Economics, and General. The scenarios were further divided into three types: Standard Causation, Normality Violation – Explicit, and Normality Violation – Implicit. The following table provides a definition for each of these scenario types:

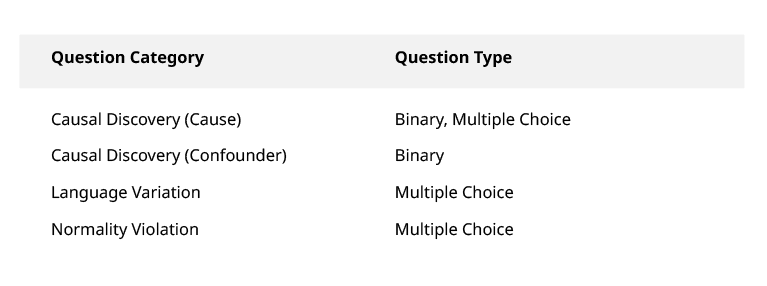

For each scenario, Welo Data created nine to 14 questions, depending on the scenario type. For Causal Discovery, both binary and multiple-choice questions were tested, but for Normality Violation and Language Variation questions, only multiple-choice questions were evaluated. Below is a general overview of the question categories and the types of questions assessed:

Final Results

Finally, both stories and questions were originally written in English and then translated into the other five locales above. Recognizing that this approach may have limitations with respect to semantic equivalence and naturalness arising from linguistic and cultural nuances, Welo Data asked the translators to avoid word-for-word translations and to prioritize retaining the original semantics while using natural word choices and grammatical structures. Standardizing the data via translation made it possible to compile both monolingual and bilingual prompts and to evaluate the LLM’s response to the same question about the same story in multiple languages, while ensuring consistent results across locales and prompt combinations.
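
Because the same question about the same story exists in every locale, a model's answers can be compared directly across languages. The Python sketch below shows one possible way to do that; it is not Welo Data's scoring method. The record layout, the function names, and the placeholder locale code `xx` are assumptions, and the sketch presumes that answer option labels are aligned across translations.

```python
from collections import defaultdict

# Hypothetical model outputs: one record per (question, locale) pair.
results = [
    {"question_id": "q1", "locale": "en", "answer": "A", "gold": "A"},
    {"question_id": "q1", "locale": "xx", "answer": "B", "gold": "A"},
    {"question_id": "q2", "locale": "en", "answer": "C", "gold": "C"},
    {"question_id": "q2", "locale": "xx", "answer": "C", "gold": "C"},
]


def accuracy_by_locale(results):
    """Share of correct answers for each locale."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in results:
        total[r["locale"]] += 1
        correct[r["locale"]] += int(r["answer"] == r["gold"])
    return {loc: correct[loc] / total[loc] for loc in total}


def consistency_rate(results):
    """Share of questions that received the same answer in every locale."""
    answers = defaultdict(set)
    for r in results:
        answers[r["question_id"]].add(r["answer"])
    consistent = sum(1 for seen in answers.values() if len(seen) == 1)
    return consistent / len(answers)


print(accuracy_by_locale(results))  # {'en': 1.0, 'xx': 0.5}
print(consistency_rate(results))    # 0.5
```

Accuracy per locale shows where a model is weaker, while the consistency rate captures whether it gives the same answer to the same question regardless of language, even when that answer is wrong.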

Deliver exceptional data and superior performance with Welo Data.